

Spring Cloud Stream offers limited strategies for error handling and retry. By default, it uses Spring Retry for blocking, in-memory retry through a Spring RetryTemplate (3 attempts in fairly quick succession). If all 3 attempts fail, the last exception is logged to the console and the message is dropped. In some cases this is adequate, but in others a more robust handling mechanism is required. The default behavior has several drawbacks:

- The retries happen in quick succession, so the total elapsed time may not be enough to cover a longer, temporary outage. The default retry and backoff settings try to strike a balance between retry coverage and not overly blocking a busy message-consuming channel.
- The message is lost. While the failure is logged, it is sometimes advantageous to review the message contents in more detail and/or re-introduce the message at a later time, when it may succeed.
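The "quick succession" drawback is easier to see with numbers. The sketch below adds up the waits that an exponential backoff policy inserts between attempts. It assumes the Spring Cloud Stream consumer defaults: maxAttempts=3, backOffInitialInterval=1000 ms, backOffMultiplier=2.0 and backOffMaxInterval=10000 ms. The BackoffWindow class is hypothetical and models only the arithmetic, not Spring Retry itself.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that models only the arithmetic of an exponential
// backoff policy: the waits inserted between consecutive attempts.
final class BackoffWindow {

    // One wait precedes each retry, so maxAttempts attempts produce maxAttempts - 1 waits.
    static List<Long> delays(int maxAttempts, long initialMs, double multiplier, long maxMs) {
        List<Long> delays = new ArrayList<>();
        double next = initialMs;
        for (int retry = 1; retry < maxAttempts; retry++) {
            delays.add((long) Math.min(next, maxMs)); // each wait is capped at maxMs
            next *= multiplier;
        }
        return delays;
    }

    // Total time spent waiting before the message is given up on.
    static long totalMs(int maxAttempts, long initialMs, double multiplier, long maxMs) {
        long total = 0;
        for (long delay : delays(maxAttempts, initialMs, multiplier, maxMs)) {
            total += delay;
        }
        return total;
    }

    public static void main(String[] args) {
        // Assumed defaults: 3 attempts, 1 s initial interval, x2 multiplier, 10 s cap.
        System.out.println(delays(3, 1_000, 2.0, 10_000));  // [1000, 2000]
        System.out.println(totalMs(3, 1_000, 2.0, 10_000)); // 3000
    }
}
```

Under these assumptions, the message is abandoned after about three seconds of total waiting. That is far shorter than many temporary outages.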

As of release train versions 2.0.4/2.1.3, Broadleaf has introduced additional message resiliency support through simplified/unified configuration for Spring Cloud Stream across several binder types. This includes opt-in configuration for existing framework message bindings, as well as support for new bindings introduced in individual implementations.

When talking about retry in terms of Kafka, the new error handling support takes on two different forms: Non-Blocking and Blocking.

Note

While the notion of blocking/non-blocking retry may not apply directly to Google PubSub, the same configuration shown below for "Non-Blocking Retry" and "Blocking With Spring Retry" may be used to achieve results for PubSub similar to those possible with Kafka. PubSub configuration also leverages a number of publisher/subscriber settings that should be fine-tuned. See here and here for more details and overall PubSub best practices (noting that Broadleaf already includes transactional, durable message publishing as a feature out-of-the-box).

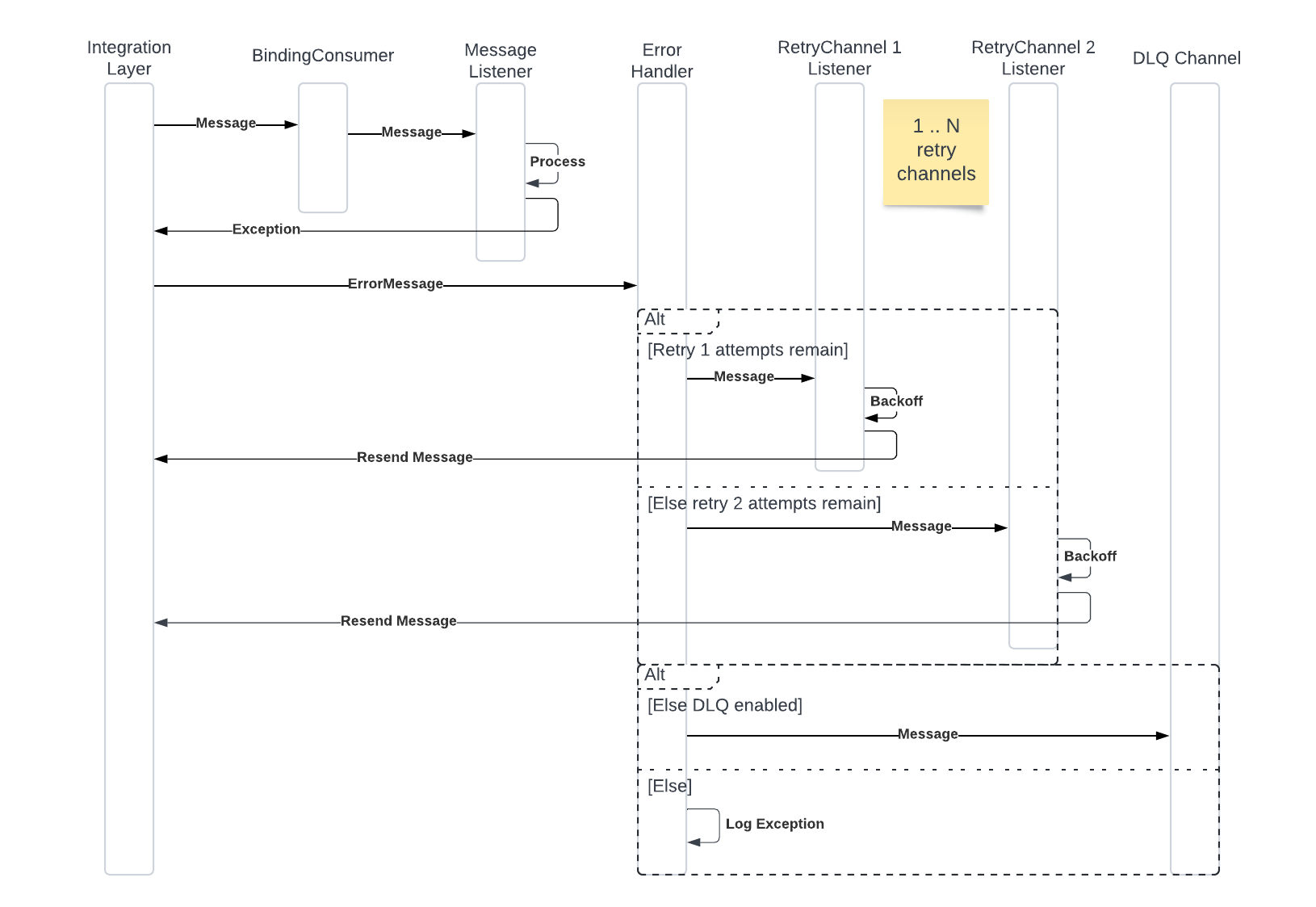

Non-blocking retry avoids blocking message processing on the main consumption binding while retries are handled. Instead, failed messages are forwarded to separate retry channels, where all messages on a given channel share the same backoff duration. Additional channels may be added to achieve different backoff intervals. Once the attempts for every retry channel are exhausted, the message is either forwarded to a DLQ or the last exception is logged to the console. For those familiar with Spring Kafka support, this is functionally similar to @RetryableTopic.
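The routing decision described above can be sketched as follows. The sketch assumes that each retry channel (tier) may redeliver a message up to its max-attempts count before the message moves to the next tier. RetryTier, NonBlockingRouter and the channel names are hypothetical. The real error handler also schedules the per-channel backoff, which this sketch leaves out.

```java
import java.util.List;

// Hypothetical model of the non-blocking routing decision. Each RetryTier stands
// for one retry channel; a message may be redelivered on a tier up to
// maxAttempts times before it moves on to the next tier.
final class NonBlockingRouter {
    private final List<RetryTier> tiers;
    private final String dlq; // null: log the last exception and drop the message

    NonBlockingRouter(List<RetryTier> tiers, String dlq) {
        this.tiers = tiers;
        this.dlq = dlq;
    }

    /**
     * Where a message is forwarded after its n-th failure (n = 1 is the failure
     * on the main binding). The main binding never waits: it just forwards.
     */
    String destinationAfterFailure(int failures) {
        if (failures < 1) {
            throw new IllegalArgumentException("failures must be >= 1");
        }
        int delivery = failures; // 1-based index of the next retry delivery
        for (RetryTier tier : tiers) {
            if (delivery <= tier.maxAttempts()) {
                return tier.channel();
            }
            delivery -= tier.maxAttempts();
        }
        return dlq != null ? dlq : "LOG_AND_DROP"; // all retry tiers exhausted
    }

    public static void main(String[] args) {
        NonBlockingRouter router = new NonBlockingRouter(List.of(
                new RetryTier("retry-1", 3, 1_000),
                new RetryTier("retry-2", 2, 6_000)), "dlq");
        for (int failures = 1; failures <= 6; failures++) {
            System.out.println(failures + " -> " + router.destinationAfterFailure(failures));
        }
        // 1..3 -> retry-1, 4..5 -> retry-2, 6 -> dlq
    }
}

// backOffMs is carried for completeness; scheduling the delay is not modelled here.
record RetryTier(String channel, int maxAttempts, long backOffMs) {}
```

With the two tiers shown, a message is handled at most six times before it lands in the DLQ: once on the main binding, three times on retry-1 and twice on retry-2.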

Note

In Broadleaf-specific retry configuration, a DLQ is just another topic in the message broker where failed messages are stored at the end of their retry lifecycle. There is nothing otherwise special or notable about the topic.

The main advantages of non-blocking retry include:

- Longer backoff intervals are achievable without sacrificing throughput on the main channel
- Messages are not lost if forwarded to a DLQ

Disadvantages include:

- Any presumption of ordered delivery is lost. However, this is generally not an issue for Broadleaf framework message flows, as ordered delivery is not a prerequisite.
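The loss of ordering follows directly from forwarding a failed message instead of retrying it in place. The hypothetical simulation below assumes that message A fails once and succeeds on its first retry. Because the main binding keeps consuming, B and C are processed before A. OrderingDemo is illustrative only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical simulation of non-blocking retry: a message listed in failOnce
// fails on its first attempt and is forwarded to a retry channel, while the
// main binding moves on to the next message.
final class OrderingDemo {

    static List<String> processedOrder(List<String> incoming, Set<String> failOnce) {
        Deque<String> retryChannel = new ArrayDeque<>();
        Set<String> alreadyFailed = new HashSet<>();
        List<String> processed = new ArrayList<>();

        for (String msg : incoming) {                 // main binding: never blocks
            if (failOnce.contains(msg) && alreadyFailed.add(msg)) {
                retryChannel.addLast(msg);            // forward instead of retrying in place
            } else {
                processed.add(msg);
            }
        }
        while (!retryChannel.isEmpty()) {             // retry binding, after its backoff
            processed.add(retryChannel.pollFirst());  // assumed to succeed on retry
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println(processedOrder(List.of("A", "B", "C"), Set.of("A"))); // [B, C, A]
    }
}
```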

Important

If robust retry handling is required, non-blocking retry is considered best practice.

When the non-blocking retry feature is enabled, several components are automatically wired up for you. These include a functionally rich error handler, one or more retry channel bindings, and a DLQ producer binding (if applicable).

Figure 1.1 : Non-Blocking Retry Flow

Important

The BindingConsumer is aware of failed messages upon entry and will only deliver the message to consuming functions qualified for the consumer group in which the original message failure occurred.

Refer to Non-Blocking Retry Properties for configuration options.

The acknowledgement semantics in standard Spring Cloud Stream consumption favor reliable retry after a catastrophic failure (e.g., the JVM exits while processing a message). Because processing never finished, no ack is produced, and the broker makes a subsequent delivery attempt. However, many message-driven flows in Broadleaf leverage IdempotentMessageConsumptionService, which generally looks for a previous lock on the idempotency id before allowing processing to continue. As a result, the redelivery after the catastrophic failure is rejected. In some cases this may be the desired behavior (e.g., if the message processing cannot be repeated in an idempotent way). However, where a retry of the message processing is desirable, the incomplete lock takeover feature is offered. Lock takeover follows this basic logic:

1. A lock for idempotency is initially created upon the first message consumption attempt, without a completion status.
2. When processing completes, a separate lock for the same idempotency key is created with a COMPLETE status.
3. Other attempts for processing may be requested (e.g., a retry attempt).
4. If a COMPLETE status lock is available for the idempotency key, the additional attempt is denied as a standard idempotency failure.
5. If a COMPLETE status lock is NOT available, the creation timestamp of the initial lock is compared against a configured stagnation threshold. If the lock is determined to be stagnant, it is released and a new lock is allowed for the new attempt.
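The takeover steps above can be sketched as a single decision function. This is an illustrative model, not the IdempotentMessageConsumptionService API. The LockTakeover type, its Decision values, and the strict "older than the threshold" comparison are assumptions. The steps above imply, but do not state, that a recent incomplete lock blocks the new attempt; the sketch returns DENY_IN_PROGRESS in that case.

```java
import java.time.Duration;
import java.time.Instant;

// Hypothetical model of the lock takeover decision; not the actual
// IdempotentMessageConsumptionService API.
final class LockTakeover {

    enum Decision { PROCEED, DENY_ALREADY_COMPLETE, DENY_IN_PROGRESS, TAKE_OVER }

    /**
     * @param initialLockCreated creation time of the initial (incomplete) lock, or null if none exists
     * @param completeLockExists whether a COMPLETE lock exists for the same idempotency key
     */
    static Decision decide(Instant initialLockCreated, boolean completeLockExists,
                           Duration stagnationThreshold, Instant now) {
        if (completeLockExists) {
            return Decision.DENY_ALREADY_COMPLETE; // standard idempotency failure
        }
        if (initialLockCreated == null) {
            return Decision.PROCEED;               // first attempt: create the initial lock
        }
        Duration age = Duration.between(initialLockCreated, now);
        if (age.compareTo(stagnationThreshold) > 0) {
            return Decision.TAKE_OVER;             // release the stagnant lock, allow a new one
        }
        // Assumption: a recent, incomplete lock means another attempt may still be running.
        return Decision.DENY_IN_PROGRESS;
    }

    public static void main(String[] args) {
        Instant created = Instant.parse("2024-01-01T00:00:00Z");
        Duration threshold = Duration.ofMinutes(5);
        System.out.println(decide(created, false, threshold, created.plusSeconds(60)));  // DENY_IN_PROGRESS
        System.out.println(decide(created, false, threshold, created.plusSeconds(600))); // TAKE_OVER
        System.out.println(decide(created, true, threshold, created.plusSeconds(600)));  // DENY_ALREADY_COMPLETE
    }
}
```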

Important

When combined with non-blocking retry, message lock takeover provides a high-availability solution for message processing. It allows processing to continue automatically, even after an unanticipated JVM exit.

Important

When used with non-blocking retry, the retry attempt count and backoff durations should together cover more than the lock takeover threshold. Otherwise, you may run out of retries before a takeover is allowed.
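The coverage guidance above can be checked with a short worked example. The sketch adds up the backoff waits across the retry tiers and compares the total with the takeover threshold. It assumes one backoff wait per retry delivery. The tier values mirror the singleIndexRequestInputOrder example configuration in this document (3 × 1000 ms and 2 × 6000 ms). The 5-minute threshold is a hypothetical value, and TakeoverCoverage is illustrative.

```java
import java.time.Duration;

// Hypothetical coverage check: do the non-blocking retry tiers wait long enough
// for the lock takeover stagnation threshold to pass before retries run out?
final class TakeoverCoverage {

    // Each row is {maxAttempts, backOffMs}; assumes one backoff wait per retry delivery.
    static Duration retryCoverage(long[][] tiers) {
        long totalMs = 0;
        for (long[] tier : tiers) {
            totalMs += tier[0] * tier[1];
        }
        return Duration.ofMillis(totalMs);
    }

    // "In excess of" the threshold: coverage must be strictly longer.
    static boolean coversThreshold(long[][] tiers, Duration stagnationThreshold) {
        return retryCoverage(tiers).compareTo(stagnationThreshold) > 0;
    }

    public static void main(String[] args) {
        long[][] tiers = { {3, 1_000}, {2, 6_000} };
        System.out.println(retryCoverage(tiers));                          // PT15S
        System.out.println(coversThreshold(tiers, Duration.ofMinutes(5))); // false
    }
}
```

With these numbers, the tiers wait only 15 seconds in total. A takeover could only succeed if you used longer backoffs, more attempts, or a shorter stagnation threshold.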

Refer to Message Lock Takeover Properties for configuration options.

Blocking retry favors immediate resolution of message processing over throughput. Message processing is retried immediately, in a way that blocks the main processing stream. This can be favorable when other processes and systems rely on the outcome of the current message consumption but are not directly launched or controlled by it. In such a case, it may be imperative that the current process is resolved before continuing. Most commonly, this arises when a later message on the same topic/partition depends on the outcome of the current message.

The main advantages of blocking retry include:

- Simpler message plumbing / fewer topics
- Ordered delivery is maintained (when supported by the broker)

Disadvantages include:

- Reduced throughput when exceptions arise

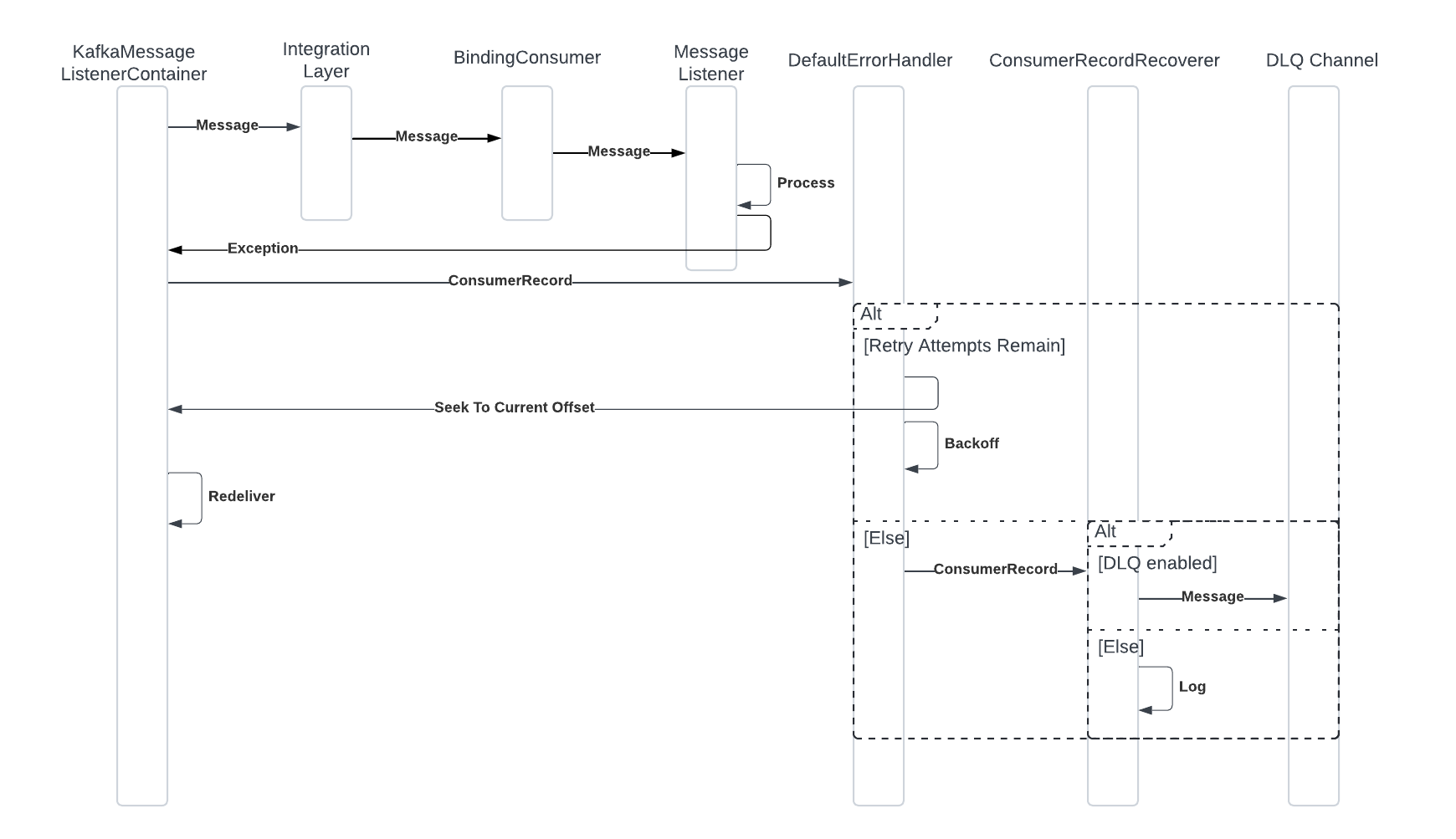

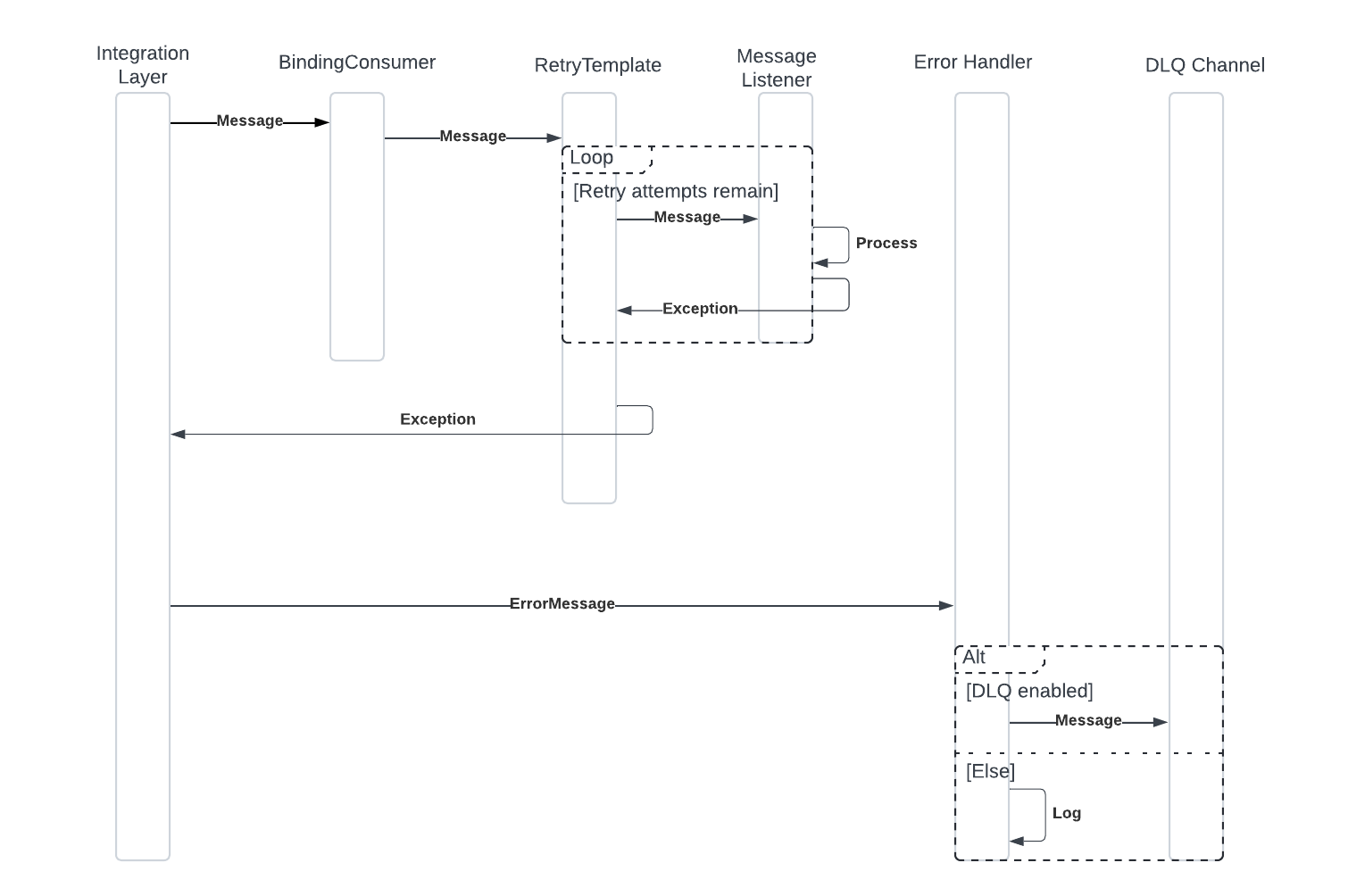

When the blocking retry feature is enabled, several components are automatically wired up for you. These vary by broker type and configuration choices, but include:

- a Spring DefaultErrorHandler instance with retry and exception filtering configuration
- a ConsumerRecordRecoverer for DLQ and log handling
- a standard ErrorMessage consumer for DLQ handling
- a standard RetryTemplate for in-memory blocking retry and backoff

Figure 2.1 : Blocking With Kafka And Seek-To-Current-Offset

Important

If you are not using non-blocking retry and the Kafka binder is in use for the current message flow, the recommended approach for extended backoff durations is to bubble the exception to the container in conjunction with seek-to-current-offset. This approach mitigates the risk of an extended backoff duration exceeding max.poll.interval.ms and causing a rebalance. This behavior is controlled by the broadleaf.messaging.blocking.retry.cloud.stream.(main-consumer-binding).kafka.seek-to-current-record-on-failure property, which is true by default.
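The hypothetical check below illustrates why seek-to-current-offset matters. It estimates how long in-memory blocking retry can hold the consumer thread for a single record and compares that with Kafka's max.poll.interval.ms (300000 ms by default). It assumes a fixed backoff and a constant processing time per attempt, and it ignores other records in the same poll batch. PollIntervalCheck is illustrative only.

```java
// Hypothetical estimate of how long in-memory blocking retry can hold the Kafka
// consumer thread for a single record. If this exceeds max.poll.interval.ms
// (Kafka default: 300000 ms), the consumer is considered failed and its
// partitions are rebalanced.
final class PollIntervalCheck {

    // maxAttempts processing runs plus (maxAttempts - 1) fixed backoff waits.
    static long worstCaseBlockingMs(int maxAttempts, long backOffMs, long processingMs) {
        return maxAttempts * processingMs + (maxAttempts - 1) * backOffMs;
    }

    static boolean risksRebalance(int maxAttempts, long backOffMs, long processingMs,
                                  long maxPollIntervalMs) {
        return worstCaseBlockingMs(maxAttempts, backOffMs, processingMs) > maxPollIntervalMs;
    }

    public static void main(String[] args) {
        long maxPollIntervalMs = 300_000; // Kafka consumer default
        // 5 attempts with a 2-minute backoff and 1 s of work per attempt: 485000 ms
        System.out.println(risksRebalance(5, 120_000, 1_000, maxPollIntervalMs)); // true
        // 3 attempts with a 1 s backoff: 5000 ms
        System.out.println(risksRebalance(3, 1_000, 1_000, maxPollIntervalMs));   // false
    }
}
```

With seek-to-current-offset, the failed record is redelivered by a later poll. The whole retry sequence therefore does not run inside a single listener invocation.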

Important

The BindingConsumer is aware of failed messages upon entry and will only deliver the message to consuming functions qualified for the consumer group in which the original message failure occurred.

Figure 2.2 : Blocking With Spring Retry

In this case, the RetryTemplate is responsible for performing the retries and backoff. This is done in-memory at the point of the Broadleaf BindingConsumer.
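The sketch below shows in-memory blocking retry of the kind the RetryTemplate performs inside the BindingConsumer. It assumes exponential backoff and a recoverer that collects messages whose attempts are exhausted. BlockingRetryDemo and its parameters are hypothetical. The sleeper is injected so the example can record waits instead of sleeping. In a real consumer it would wrap Thread.sleep. The key property is that m2 is not attempted until m1 has either succeeded or been recovered.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.LongConsumer;

// Hypothetical stand-in for in-memory blocking retry: the next message is not
// attempted until the current one succeeds or exhausts its attempts.
final class BlockingRetryDemo {

    static List<String> consumeInOrder(List<String> messages, Consumer<String> handler,
                                       int maxAttempts, long initialBackOffMs, double multiplier,
                                       LongConsumer sleeper, List<String> recovered) {
        List<String> succeeded = new ArrayList<>();
        for (String msg : messages) {
            long backOff = initialBackOffMs;
            for (int attempt = 1; ; attempt++) {
                try {
                    handler.accept(msg);
                    succeeded.add(msg);
                    break;
                } catch (RuntimeException e) {
                    if (attempt >= maxAttempts) {
                        recovered.add(msg); // e.g. copy to a DLQ or log the last exception
                        break;
                    }
                    sleeper.accept(backOff); // the consuming thread waits here
                    backOff = (long) (backOff * multiplier);
                }
            }
        }
        return succeeded;
    }

    public static void main(String[] args) {
        int[] failuresLeft = {2}; // "m1" fails twice, then succeeds
        List<Long> sleeps = new ArrayList<>();
        List<String> recovered = new ArrayList<>();
        List<String> ok = consumeInOrder(List.of("m1", "m2"),
                msg -> {
                    if (msg.equals("m1") && failuresLeft[0]-- > 0) {
                        throw new IllegalStateException("transient failure");
                    }
                },
                3, 1_000, 2.0, sleeps::add, recovered);
        System.out.println(ok);        // [m1, m2]
        System.out.println(sleeps);    // [1000, 2000]
        System.out.println(recovered); // []
    }
}
```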

Important

The BindingConsumer is aware of failed messages upon entry and will only deliver the message to consuming functions qualified for the consumer group in which the original message failure occurred.

Refer to Blocking Retry Properties for configuration options.

A copy of the failed message is optionally sent to a DLQ at the end of the retry lifecycle. The message is populated with several headers that are useful for research and diagnosis:

- FAILURE_TRACE - Contains a filtered stack trace for the last exception that occurred.
- FAILURE_MESSAGE - Contains the message for the last exception that occurred.
- CONSUMER_BINDING - Contains the original consumer binding name.
- GROUP_OVERRIDE - Contains the group in which the failed message was consumed.
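The sketch below attaches the diagnostic headers listed above to the DLQ copy of a message. Only the header names come from this document. The DlqHeaders helper, its string-valued map, and the unfiltered FAILURE_TRACE are simplifying assumptions.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper that attaches the diagnostic headers described above to
// the DLQ copy of a failed message. Only the header names come from this document.
final class DlqHeaders {

    static Map<String, String> build(Throwable lastFailure, String consumerBinding, String group) {
        Map<String, String> headers = new LinkedHashMap<>();
        // Simplification: the full trace is used here; the real header is filtered.
        headers.put("FAILURE_TRACE", Arrays.stream(lastFailure.getStackTrace())
                .map(StackTraceElement::toString)
                .collect(Collectors.joining("\n")));
        headers.put("FAILURE_MESSAGE", String.valueOf(lastFailure.getMessage()));
        headers.put("CONSUMER_BINDING", consumerBinding);
        headers.put("GROUP_OVERRIDE", group);
        return headers;
    }

    public static void main(String[] args) {
        Map<String, String> headers =
                build(new IllegalStateException("index unavailable"), "myInput", "group");
        System.out.println(headers.get("FAILURE_MESSAGE"));  // index unavailable
        System.out.println(headers.get("CONSUMER_BINDING")); // myInput
        System.out.println(headers.get("GROUP_OVERRIDE"));   // group
    }
}
```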

By default, the stack trace filter only includes lines matching these regular expressions:

- `.*com\\.broadleaf.*`
- `.*org\\.spring.*`

However, this can be altered, and additional lines included, via configuration. Refer to Standard Retry Properties for configuration options.
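The default filter can be illustrated with java.util.regex. This sketch assumes that each stack trace line is tested independently and kept if any configured pattern matches the whole line. Because both defaults start and end with .*, full and partial matching behave the same for single lines. The patterns are written as Java string literals, so \\. matches a literal dot. TraceFilter and the sample trace lines are hypothetical.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical stack-trace filter: keep a line only if it fully matches at least
// one configured pattern. The defaults are the two expressions listed above.
final class TraceFilter {

    static final List<Pattern> DEFAULTS = List.of(
            Pattern.compile(".*com\\.broadleaf.*"),
            Pattern.compile(".*org\\.spring.*"));

    static List<String> filter(List<String> traceLines, List<Pattern> include) {
        return traceLines.stream()
                .filter(line -> include.stream().anyMatch(p -> p.matcher(line).matches()))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> trace = List.of(                       // illustrative trace lines
                "java.lang.IllegalStateException: boom",
                "\tat com.broadleaf.example.OrderListener.handle(OrderListener.java:42)",
                "\tat java.base/java.lang.Thread.run(Thread.java:833)",
                "\tat org.springframework.example.Invoker.invoke(Invoker.java:10)");
        // Prints only the com.broadleaf and org.springframework lines.
        filter(trace, DEFAULTS).forEach(System.out::println);
    }
}
```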

Note

Apart from the additional headers, the messages in the DLQ are replicas of the original message. As such, they may be re-introduced into the original queue for re-processing, if desired. This is generally done with broker tooling for managing messages. Tools such as AKHQ (for Kafka) not only allow research and review of messages, but also provide message-moving capabilities. For larger volumes, additional logic may be added to stream messages from a DLQ into another destination. Specific details regarding moving messages for individual brokers are outside the scope of this document.

The following enables non-blocking retry for the singleIndexRequestInputOrder binding, with two retry channels and a DLQ:

```yaml
spring:
  cloud:
    stream:
      bindings:
        singleIndexRequestOutputOrder:
          destination: singleIndexRequest
broadleaf:
  messaging:
    nonblocking:
      retry:
        cloud:
          stream:
            enabled: true
            bindings:
              singleIndexRequestInputOrder:
                - max-attempts: 3
                  back-off: 1000
                  consumer-binding: singleIndexRequestInputOrderInputRetry1
                  producer-binding: singleIndexRequestInputOrderOutputRetry1
                  original-producer-binding: singleIndexRequestOutputOrder
                - max-attempts: 2
                  back-off: 6000
                  consumer-binding: singleIndexRequestInputOrderInputRetry2
                  producer-binding: singleIndexRequestInputOrderOutputRetry2
                  original-producer-binding: singleIndexRequestOutputOrder
                  dlq: singleIndexRequestOrderDLQ
```

Note

This is an interesting case. When running a granular flexpackage, the SearchServices microservice does not contain the original producer for the message. Similarly, in the balanced configuration, the supporting flexpackage does not include the producer. As a result, an output binding (singleIndexRequestOutputOrder) must be added for the granular or supporting flexpackages so that the retry loop can function locally.

The order index processing does not leverage IdempotentMessageConsumptionService, since repeat index processing for the order is harmless. No further configuration is required beyond what is shown above.

The following enables message lock takeover for a listener that leverages IdempotentMessageConsumptionService:

```yaml
broadleaf:
  message:
    lock:
      takeover:
        enabled: true
        include-listener-names:
          - UserUpdateListenerCustomer
```

The following configures blocking retry with a DLQ for the myInput binding:

```yaml
spring:
  cloud:
    stream:
      bindings:
        myInput:
          destination: mine
          group: group
broadleaf:
  messaging:
    blocking:
      retry:
        cloud:
          stream:
            enabled: true
            bindings:
              myInput:
                max-attempts: 3
                back-off: 1000
                dlq: myInputDLQ
```

The same configuration can also exclude certain exceptions from retry (here java.lang.IllegalStateException and java.lang.IllegalArgumentException) and forward them immediately to a DLQ:

```yaml
spring:
  cloud:
    stream:
      bindings:
        myInput:
          destination: mine
          group: group
broadleaf:
  messaging:
    blocking:
      retry:
        cloud:
          stream:
            enabled: true
            bindings:
              myInput:
                max-attempts: 3
                back-off: 1000
                dlq: myInputDLQ
                exclude:
                  - java.lang.IllegalStateException
                  - java.lang.IllegalArgumentException
```
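The exclude list can be modelled as a classification step that runs before any further retry. This hypothetical sketch treats subclasses of an excluded type as excluded too (via Class.isInstance), and it does not inspect the cause chain. Both behaviors are assumptions, not descriptions of the Broadleaf implementation. RetryClassifier is illustrative only.

```java
import java.util.List;

// Hypothetical classification step for the "exclude" list: an excluded failure
// skips any remaining retries and is forwarded straight to the DLQ.
final class RetryClassifier {

    enum Outcome { RETRY, DLQ_NOW }

    static Outcome classify(Throwable failure, List<String> excludedTypeNames) {
        for (String name : excludedTypeNames) {
            try {
                Class<?> excluded = Class.forName(name);
                // Assumption: subclasses of an excluded type are excluded too.
                if (excluded.isInstance(failure)) {
                    return Outcome.DLQ_NOW;
                }
            } catch (ClassNotFoundException e) {
                // Unknown class name in configuration: ignored in this sketch.
            }
        }
        return Outcome.RETRY; // the cause chain is not inspected here
    }

    public static void main(String[] args) {
        List<String> exclude = List.of(
                "java.lang.IllegalStateException", "java.lang.IllegalArgumentException");
        System.out.println(classify(new IllegalStateException("bad state"), exclude));    // DLQ_NOW
        System.out.println(classify(new NumberFormatException("not a number"), exclude)); // DLQ_NOW
        System.out.println(classify(new RuntimeException("timeout"), exclude));           // RETRY
    }
}
```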